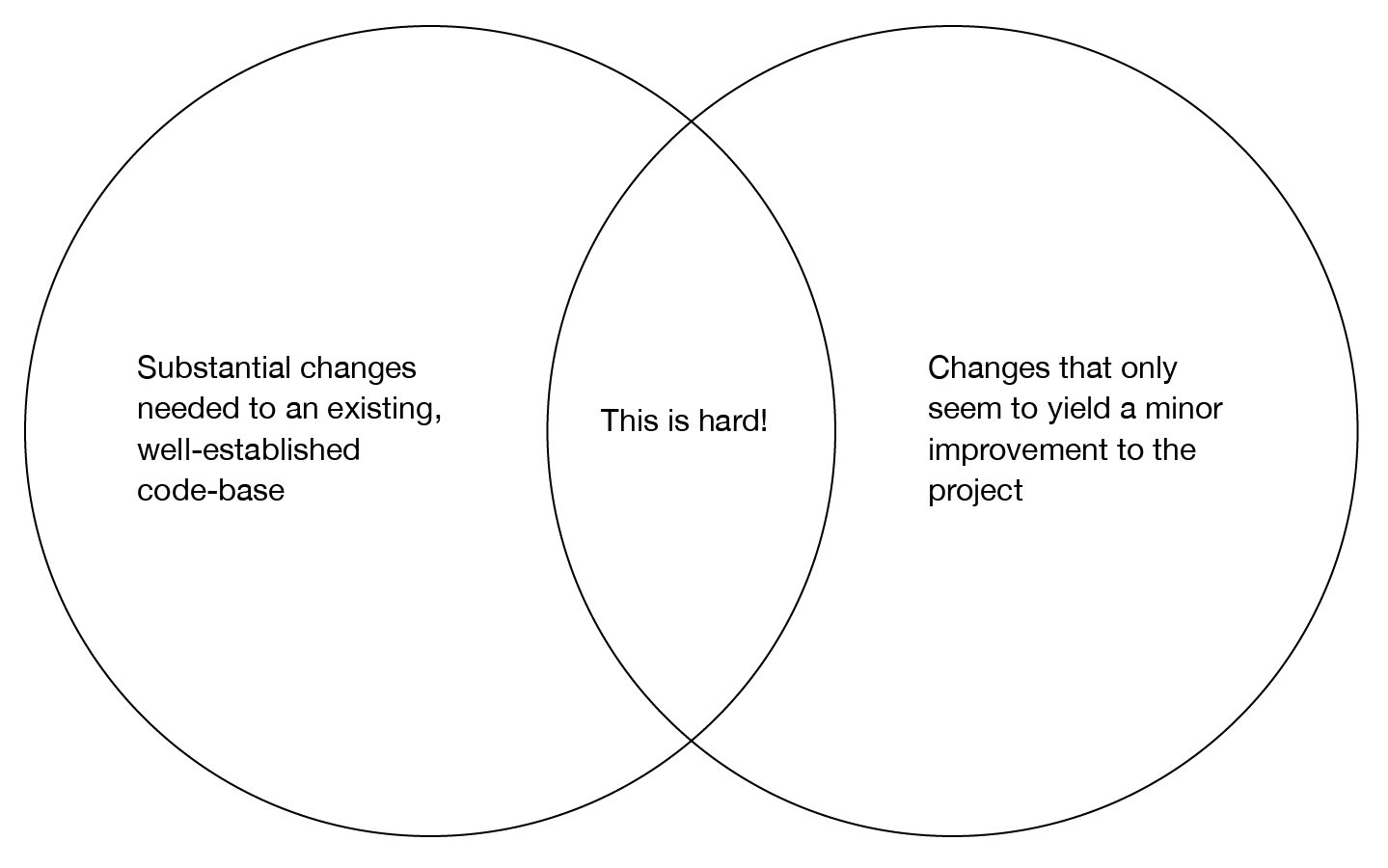

Open source coders can have a difficult time with particularly large pull requests. Many programmers work on open source only part-time and can have trouble setting aside the hours to properly investigate, test, evaluate or even read through a particularly meaty code contribution. Their employers don’t always view open source work as mission critical, or don’t understand the purpose of improvements designed to enhance the experience of the entire community.

What’s more, the progress on any open source project can be difficult to predict. Advances can come in fits and starts. That can happen when you’re building any software, but it’s particularly difficult to manage open source contributors who may be giving their time and expertise as part of a passion project, to enhance their developer résumé, or just to flex their coding expertise and publicly prove their worth. Every open source contributor develops code for his or her own reasons, and getting alignment on release schedules can be particularly challenging, especially if you are not in close contact with the maintainers of whatever project you are working on.

I saw this problem firsthand as I worked with the F# team. None of the people responsible for the project had looked into our PR in depth, which became a problem when we tried to get anything merged.

So, in early November 2022, we organised a closed virtual call with the F# team, walking them through everything we had done so far. It was useful to get the team up to speed, and we gained some insight into the things we still needed to address.

In January 2023, we found ourselves in a similar situation: the PR was large, and we wanted to get a proper review from the team. Since many people on the F# team were new, it was challenging to get a good review from them. They were still getting up to speed on a lot of things themselves, so it was perhaps a little early to ask them to review this properly.

Luckily, I was invited to speak at the NDC London conference near the end of January, where I spoke about my work in F#. While I was at NDC, I met again with Don Syme and asked him to look over my code.

It felt like a bold move. I was advocating for my code contribution directly to the person most responsible for the entire project. But Don’s gracious on-the-spot review sparked the rest of the F# team to take note and finally give our code a close look.

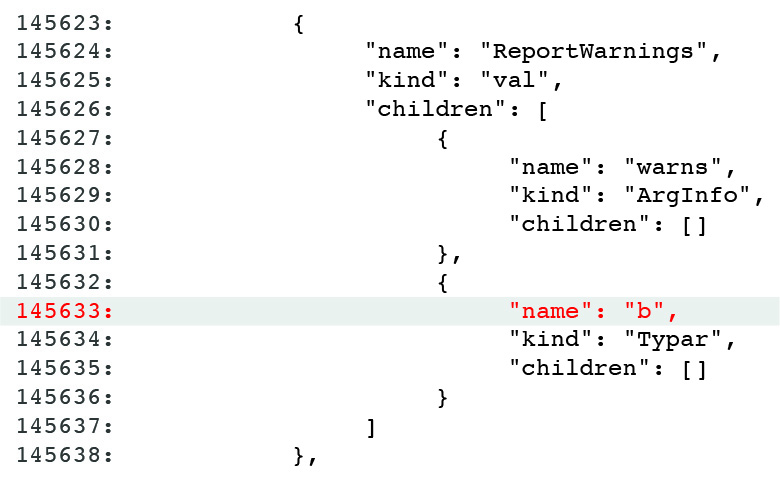

In February, the PR got merged behind a feature flag. This was a great achievement, but it still had a flaw. We discovered that the result of the compilation was not entirely deterministic. If you work anywhere around computers, you know exactly how bad this is. It means that each time we compiled the code, we could not be certain we would get the same output from the same inputs. No good.
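To make "not deterministic" concrete, here is a minimal sketch of one way to check for it: run the same build several times and compare a hash of each output. This is not how the F# team tested the compiler. The `build` callable and the two stand-in functions are hypothetical, and a real check would invoke the compiler and read the produced binary instead.

```python
import hashlib
import itertools
from typing import Callable


def output_digests(build: Callable[[], bytes], runs: int = 3) -> set:
    """Run `build` several times and collect the SHA-256 digest of each output."""
    return {hashlib.sha256(build()).hexdigest() for _ in range(runs)}


def is_deterministic(build: Callable[[], bytes], runs: int = 3) -> bool:
    """A build is deterministic here if every run produced byte-identical output."""
    return len(output_digests(build, runs)) == 1


# Hypothetical stand-in: same inputs, same bytes on every run.
def stable_build() -> bytes:
    return b"module A\nmodule B\n"


# Hypothetical stand-in: the output depends on something other than the inputs
# (a call counter here; in a real build it could be timing or ordering).
_counter = itertools.count()


def unstable_build() -> bytes:
    return f"module A\nbuild #{next(_counter)}\n".encode()


if __name__ == "__main__":
    print("stable build deterministic:", is_deterministic(stable_build))
    print("unstable build deterministic:", is_deterministic(unstable_build))
```

Comparing hashes only tells you that two runs differ, not where. Finding the cause still means diffing the outputs themselves.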